UPDATE 1 [2021/07/25]: It seems that Git LFS is able to resume a push after a network failure. At least that’s how it behaves on Microsoft Azure DevOps. So, dividing huge commits into smaller ones should be totally redundant. How did I notice this? Today, I pushed a single huge commit (around 53GB) and it failed at 39GB due to a connection error, which I did not notice for some time. A few hours later, when I issued the push command again, it picked up and resumed the push at 39GB, which was really exciting.

UPDATE 2 [2021/07/25]: After pushing the repository to Azure DevOps, if you find yourself stuck in git pull without it doing anything, the following command will fix subsequent pulls:

$ git pull origin master

Or, alternatively:

$ git fetch origin master

$ git reset --hard FETCH_HEAD

UPDATE 3 [2021/07/28]: I’ve noticed that, because file modification times affect how Rsync and Git work by default, my approach in the original script was totally wrong. It caused a bug where every update committed all tracked files over again, bloating the repository even though the content of the files was unchanged. This led me to completely rewrite the script. The new script has been extensively tested with two repositories/projects and hopefully works as expected. In addition, the script now shows progress for every step, which keeps you informed and gives an estimate of how long the job is going to take. And, last but not least, I have edited and improved the blog post a bit.
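To illustrate the modification-time pitfall from UPDATE 3, here is a minimal sketch. It is not the script from this post: the file names are made up, and it only shows that two files with identical content can still look different to a timestamp-based check, while a content-based check such as cmp treats them as equal.

```shell
#!/usr/bin/env bash
# Hypothetical demo: same content, different modification times.
workdir="$(mktemp -d)"
printf 'same content\n' > "$workdir/a.txt"
printf 'same content\n' > "$workdir/b.txt"

# Backdate one file so the two timestamps differ.
touch -t 202001010000 "$workdir/a.txt"

# Timestamp comparison: -nt is true when b.txt is newer than a.txt.
if [ "$workdir/b.txt" -nt "$workdir/a.txt" ]; then
    mtime_result="differs by mtime"
else
    mtime_result="same mtime"
fi

# Content comparison: cmp -s exits 0 when the bytes are identical.
if cmp -s "$workdir/a.txt" "$workdir/b.txt"; then
    content_result="same content"
else
    content_result="differs by content"
fi

echo "$mtime_result / $content_result"
rm -rf "$workdir"
```

For Rsync, the -c/--checksum option switches from the default size-and-time quick check to a checksum comparison, at the cost of reading every file. Whether that fits a given sync workflow is a judgment call.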

UPDATE 4 [2021/08/04]: Because of nested .gitignore files inside the Unreal Engine dependencies, I noticed that small parts of the dependencies for building UE4/UE5 on Microsoft Windows were not being copied over to the repository. I have fixed the script to take care of that as well.

UPDATE 5 [2021/11/30]: Sometimes the number of renamed Unreal Engine files surpasses Git’s rename limit inside the Sync repository (the intermediary local Git repository that we are going to use for syncing the engine source code with upstream):

warning: exhaustive rename detection was skipped due to too many files.

warning: you may want to set your diff.renameLimit variable to at least 13453 and retry the command.

So, you could set that to a really large number in order to keep track of file renames:

$ cd ~/dev/MamadouArchives-Sync

$ git config diff.renameLimit 999999

$ git config merge.renameLimit 999999

Note: You will get this warning only when the Git option diff.renames is set to true (the default behavior). Likewise, the above settings do not have any effect when copy/rename detection is turned off. Nonetheless, you can always check your settings with:

$ git config -l

UPDATE 6 [2021/12/18]: I’ve added a step regarding EngineAssociation in the project’s .uproject file, which I forgot to mention in the original post.

UPDATE 7 [2023/03/03]: In UE5, the UE4Games.uprojectdirs file has been renamed to Default.uprojectdirs, though the syntax and the contents of the file have remained the same.

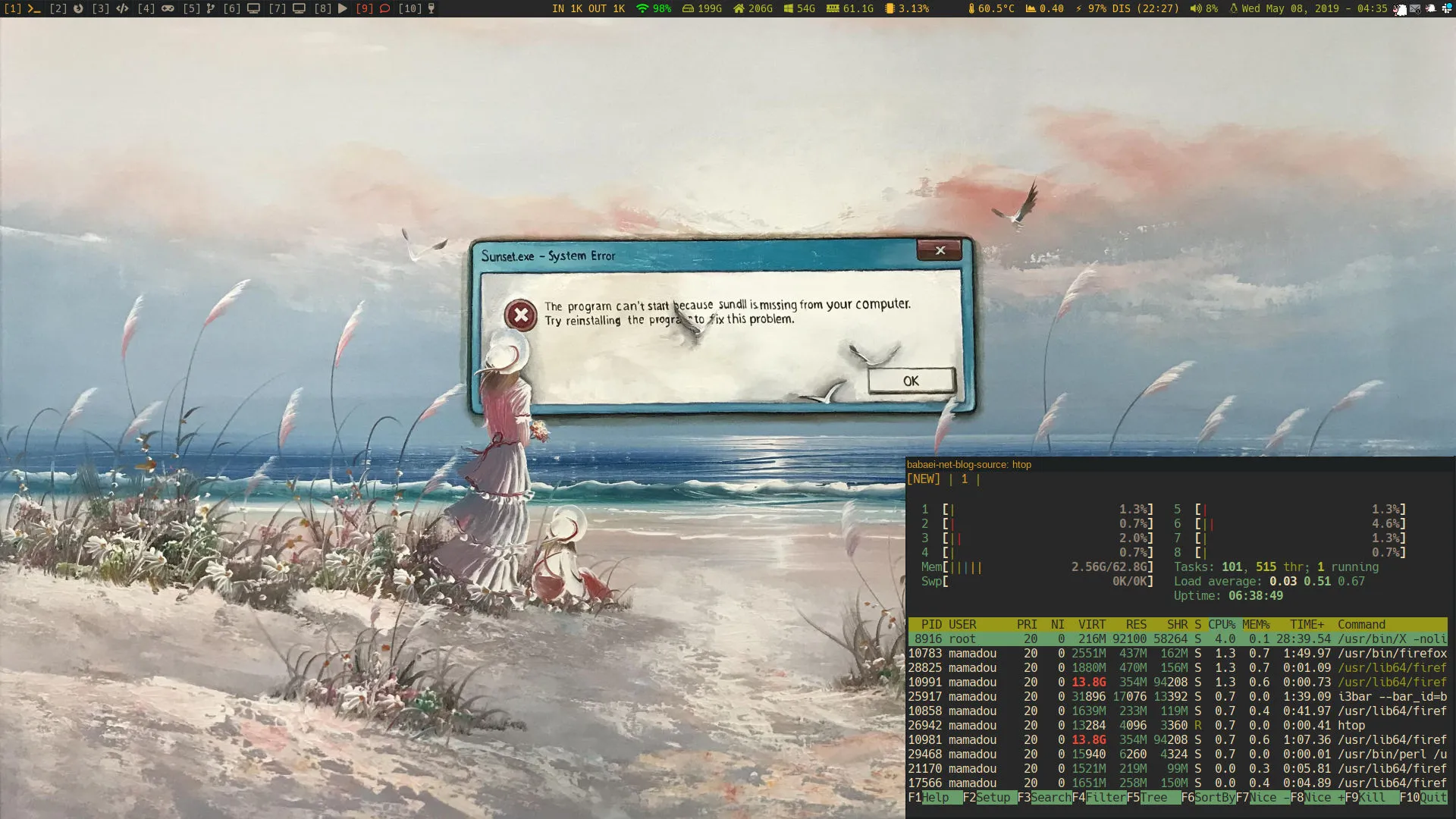

UPDATE 8 [2023/03/04]: After upgrading my project to Unreal Engine 5.1, I was getting an HTTP 413 Request Entity Too Large error, even though I had already set the Git configuration http.version to HTTP/1.1 as instructed in this article, the commit size was no bigger than 166.30 MB, and my upload bandwidth was acceptable:

Enumerating objects: 190058, done.

Counting objects: 100% (164439/164439), done.

Delta compression using up to 16 threads

Compressing objects: 100% (113439/113439), done.

Writing objects: 100% (138834/138834), 166.30 MiB | 47.32 MiB/s, done.

Total 138834 (delta 35613), reused 121343 (delta 22206), pack-reused 0

error: RPC failed; HTTP 413 curl 22 The requested URL returned error: 413

send-pack: unexpected disconnect while reading sideband packet

fatal: the remote end hung up unexpectedly

Everything up-to-date

I tried every suggestion that I came across in order to debug and resolve the issue, to no avail, including enabling Git verbose logging:

$ export GIT_TRACE_PACKET=1

$ export GIT_TRACE=1

$ export GIT_CURL_VERBOSE=1

And then maxing out all the size limits, buffers, packet sizes, and other hints:

[core]

compression = 0

packedGitLimit = 512m

packedGitWindowSize = 512m

[http]

postBuffer = 2147483648

[https]

postBuffer = 2147483648

[init]

defaultBranch = master

[pack]

deltaCacheSize = 2047m

packSizeLimit = 2047m

window = 1

windowMemory = 2047m

Then I tried to change the origin URL to SSH:

[remote "origin"]

url = https://SOME-ORGANIZATION@dev.azure.com/SOME-ORGANIZATION/MamadouArchives/_git/MamadouArchives

#url = git@ssh.dev.azure.com:v3/SOME-ORGANIZATION/MamadouArchives/MamadouArchives

And then I tried pushing without LFS:

$ git push --set-upstream origin 5.1 --no-verify

This attempt was futile as well, which made me revert to HTTPS. Then, since I had made a few commits, I tried to push them one commit at a time (5.1, which appears twice in the following command, is the name of the new local branch to be pushed):

$ git rev-list --reverse 5.1 | ruby -ne 'i ||= 0; i += 1; puts $_ if i % 1 == 0' | xargs -I{} git push origin +{}:refs/heads/5.1 --no-verify

And sadly, the approach of pushing one commit at a time was fruitless as well :/
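The one-liner above can also be sketched in plain shell, without Ruby. In the Ruby filter, i % 1 == 0 is always true, so every commit gets pushed; the sketch below exposes that step as a parameter and always includes the last commit, so the branch tip is not skipped. The helper names every_nth and push_in_steps are made up for this example, and this is an illustration under those assumptions rather than the exact method used in this post.

```shell
#!/usr/bin/env bash
# every_nth STEP: read lines on stdin and print every STEP-th one,
# always including the last line so the branch tip is never skipped.
every_nth() {
    awk -v step="$1" '
        { last = $0 }
        NR % step == 0 { print; printed = NR }
        END { if (NR > 0 && printed != NR) print last }
    '
}

# push_in_steps REMOTE BRANCH [STEP]: hypothetical helper that pushes the
# history of BRANCH oldest-first, one checkpoint every STEP commits.
push_in_steps() {
    local remote="$1" branch="$2" step="${3:-1}" commits commit
    commits="$(git rev-list --reverse "$branch" | every_nth "$step")" || return 1
    # Commit hashes contain no whitespace, so word splitting is safe here.
    for commit in $commits; do
        # --no-verify skips the pre-push hook, as in the command above.
        git push "$remote" "+${commit}:refs/heads/${branch}" --no-verify || return 1
    done
}

# Example (not executed here): push_in_steps origin 5.1 1000
```

A larger step means fewer, bigger pushes; a step of 1 reproduces the commit-by-commit behavior.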

Thus, for the time being I’m stuck pushing the updated project from my Linux machine and pulling it from my Windows machine. I’ll post another update once I’ve figured out what’s going wrong.

UPDATE 9 [2023/03/04]: As an experiment, I created a new organization and a new repository inside it. Then, prior to changing the origin URL, I fetched all LFS objects by issuing:

$ git lfs fetch --all

fetch: 78618 objects found, done.

fetch: Fetching all references...

Then I decided to push the Git commits first, without the LFS objects. So, after updating the origin URL inside .git/config:

$ git push --set-upstream origin 5.1 --no-verify

Enumerating objects: 296578, done.

Counting objects: 100% (296578/296578), done.

Delta compression using up to 16 threads

Compressing objects: 100% (210384/210384), done.

Writing objects: 100% (296578/296578), 360.11 MiB | 5.78 MiB/s, done.

Total 296578 (delta 78143), reused 296578 (delta 78143), pack-reused 0

remote: Analyzing objects... (296578/296578) (77738 ms)

remote: Storing packfile... done (10303 ms)

remote: Storing index... done (3740 ms)

To https://dev.azure.com/SOME-ORGANIZATION/MamadouArchives/_git/MamadouArchives

* [new branch] 5.1 -> 5.1

branch '5.1' set up to track 'origin/5.1'

¯\_(ツ)_/¯ As unexpected as it seems, it worked! As you can see, the actual Git objects in this repository, without the LFS objects, are nowhere near the 10 GB size limit:

$ git count-objects -vH

count: 0

size: 0 bytes

in-pack: 296583

packs: 1

size-pack: 366.76 MiB

prune-packable: 0

garbage: 0

size-garbage: 0 bytes

The issue might be that I’ve reached some kind of limit on the main organization that I’m not aware of.
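The size check above can also be scripted. This is a rough sketch, assuming the 10 GB repository limit mentioned in this post: it reads the output of git count-objects -v (without -H, where size-pack is reported in KiB) and prints the share of the limit used by packed objects. The function name and the LIMIT_KIB variable are made up for the example.

```shell
#!/usr/bin/env bash
# Assumed limit taken from the post: 10 GB, expressed in KiB.
LIMIT_KIB=$((10 * 1024 * 1024))

# pack_percent_of_limit: read `git count-objects -v` output on stdin
# and print the percentage of the limit occupied by packed objects,
# rounded down to a whole number.
pack_percent_of_limit() {
    awk -v limit="$LIMIT_KIB" '
        $1 == "size-pack:" { printf "%d\n", ($2 * 100) / limit }
    '
}

# Example (inside a repository):
#   git count-objects -v | pack_percent_of_limit
```

Only packed objects are counted, so running git gc first gives a more representative number.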

Anyway, I then pushed master and checked out the new branch again to continue with the upgrade:

$ git checkout master

$ git push origin master --no-verify

$ git checkout 5.1

Then I proceeded to push all LFS objects:

$ git lfs push origin --all

UPDATE 10 [2023/03/05]: Yesterday, I removed a large redundant repository from the previous organization to see whether I could push my updates again, in case the error was due to hitting some kind of size ceiling. It didn’t work. I also cleaned up the limit hacks I had added to my ~/.gitconfig in UPDATE 8. Then, after successfully pushing to the new organization/repository, I decided to revert the origin URL inside the local repository’s .git/config and try pushing to the old repository once more. Guess what? It worked! Weird Microsoft/Azure! I’m not sure what fixed the issue. Perhaps I simply had to wait for Microsoft to reclaim the space of the repository I had deleted, if the organization size limit was indeed the issue. I don’t really know.
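The migration order from UPDATE 9 (fetch the LFS objects, switch the remote, push the commits, then push the LFS objects) can be sketched as a small helper with a dry-run mode. The names run and migrate_remote and the DRY_RUN variable are made up for this example, and it uses git remote set-url instead of editing .git/config by hand; treat it as a sketch under those assumptions, not as the exact procedure I followed.

```shell
#!/usr/bin/env bash
# run CMD...: print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# migrate_remote NEW_URL BRANCH: hypothetical helper mirroring UPDATE 9.
migrate_remote() {
    local new_url="$1" branch="$2"
    # Download every LFS object from the old remote first.
    run git lfs fetch --all || return 1
    # Point origin at the new repository.
    run git remote set-url origin "$new_url" || return 1
    # Push the commits only; --no-verify skips the LFS pre-push hook.
    run git push --set-upstream origin "$branch" --no-verify || return 1
    # Finally upload the LFS objects.
    run git lfs push origin --all
}

# Example (prints the plan without touching anything):
#   DRY_RUN=1 migrate_remote https://example.invalid/new-repo 5.1
```

Running it with DRY_RUN=1 first makes it easy to review the commands before anything is pushed.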

In the gamedev industry, it’s a well-known fact that Unreal Engine projects have always been huge and a pain to manage properly. And it becomes more painful by the day as your project moves forward and grows in size. Some even keep the engine source and its monstrous binary dependencies inside their source control management software. If you are a AAA game development company, or you work for one, there’s probably some system in place with an unlimited quota to take care of that. But for most of us indie devs or individual hobbyists, there don’t seem to be many affordable options, especially when your team is scattered across the globe.

There are plenty of costly solutions for keeping UE4 projects under source control, ranging from maintaining a local physical server or renting a VPS with plenty of space in the cloud, equipped with a self-hosted Git, SVN, or Perforce, to using cloud SCM providers such as GitHub, GitLab, Bitbucket, or Perforce. Since I prefer cloud SCM providers and Git + Git LFS (which also supports file locking), let’s take a look at some popular ones such as GitHub and GitLab.

GitHub for one, provides data packs, but the free offering is far from enough for collaborative UE4 projects:

Every account using Git Large File Storage receives 1 GB of free storage and 1 GB a month of free bandwidth. If the bandwidth and storage quotas are not enough, you can choose to purchase an additional quota for Git LFS. Unused bandwidth doesn’t roll over month-to-month.

…

Additional storage and bandwidth is offered in a single data pack. One data pack costs $5 per month, and provides a monthly quota of 50 GB for bandwidth and 50 GB for storage. You can purchase as many data packs as you need. For example, if you need 150 GB of storage, you’d buy three data packs.

For GitLab, although the generous initial 10GB repository size is way beyond the 1GB repository size offered by GitHub, the LFS pricing is insanely high:

Additional repository storage for a namespace (group or personal) is sold in annual subscriptions of $60 USD/year in increments of 10GB. This storage accounts for the size calculated from Repositories, which includes the git repository itself and any LFS objects.

When adding storage to an existing subscription, you will be charged the prorated amount for the remaining term of your subscription. (ex. If your subscription ends in 6 months and you buy storage, you will be charge for 6 months of the storage subscription, i.e. $30 USD)
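To make the two pricing models easier to compare, here is a small arithmetic sketch based only on the figures quoted above: GitHub data packs of 50 GB at $5 per month, and GitLab storage in 10GB increments at $60 per year. It ignores the free tiers and bandwidth usage, and the function names are made up for the example.

```shell
#!/usr/bin/env bash
# github_packs_needed GB: data packs of 50 GB each, rounded up.
github_packs_needed() {
    echo $(( ($1 + 49) / 50 ))
}

# github_monthly_cost GB: number of packs times $5 per month.
github_monthly_cost() {
    echo $(( $(github_packs_needed "$1") * 5 ))
}

# gitlab_yearly_cost GB: 10 GB increments at $60 per year each, rounded up.
gitlab_yearly_cost() {
    echo $(( (($1 + 9) / 10) * 60 ))
}

# gitlab_prorated_cost GB MONTHS_LEFT: yearly cost scaled to the remaining term.
gitlab_prorated_cost() {
    echo $(( $(gitlab_yearly_cost "$1") * $2 / 12 ))
}

github_packs_needed 150      # the 150 GB example from the GitHub quote
gitlab_prorated_cost 10 6    # the 6-month example from the GitLab quote
github_monthly_cost 40       # hypothetical: 40 GB of LFS objects
gitlab_yearly_cost 40        # hypothetical: 40 GB of additional storage
```

Run as is, it prints 3, 30, 5 and 240, one per line: 40 GB of LFS data would come to $5 per month on GitHub (before bandwidth) versus $240 per year on GitLab under these assumptions.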

Well, before all this gets you disappointed, let’s hear the good news from the Microsoft Azure DevOps team:

In uncommon circumstances, repositories may be larger than 10GB. For instance, the Windows repository is at least 300GB. For that reason, we do not have a hard block in place. If your repository grows beyond 10GB, consider using Git-LFS, Scalar, or Azure Artifacts to refactor your development artifacts.

Before we proceed any further, there are some catches to consider about Microsoft Azure DevOps:

1. The maximum Git repository size is 10GB, which, considering that we keep binary assets and huge files in LFS, is way beyond any project’s actual needs. For Git LFS, it seems that Microsoft has been providing unlimited free storage since at least 2015. For comparison, the engine source code for 4.27 is 1.4GB on disk, and it shrinks to less than 230MB once it is committed to a Git repository:

$ cd /path/to/ue4.27/source

$ du -sh

1.4G .

$ git init

$ git add .

$ git commit -m "add unreal engine 4.27 source code"

$ git count-objects -vH

count: 97545

size: 900.25 MiB

in-pack: 110815

packs: 1

size-pack: 227.80 MiB

prune-packable: 97545

garbage: 0

size-garbage: 0 bytes

2. The maximum push size is limited to 5GB at a time. The 5GB limit applies only to files in the actual repository and does not affect LFS objects. Thus, there are no limits on pushes of LFS objects. Despite that, if your internet connection is not stable, you could divide your files into multiple commits and push them separately. For example, the initial Git dependencies for UE 4.27 are around 40GB, spread across ~70,000 files. Instead of committing and pushing a 40GB chunk all at once, one could divide that into multiple commits and push those commits one by one using the following command:

$ git rev-list --reverse master \
    | ruby -ne 'i ||= 0; i += 1; puts $_ if i % 1 == 0' \
    | xargs -I{} git push origin +{}:refs/heads/master

3. Sadly, at the moment Azure DevOps does not support LFS over SSH. So, you are bound to git push/pull over HTTPS, which might be annoying for some, especially since it keeps asking for the HTTPS token 3 consecutive times on every push or pull!

Q: I’m using Git LFS with Azure DevOps Services and I get errors when pulling files tracked by Git LFS.

A: Azure DevOps Services currently doesn’t support LFS over SSH. Use HTTPS to connect to repos with Git LFS tracked files.

4. Last but not least, there is an issue with the Microsoft implementation of LFS, which rejects large LFS objects and spits out a bunch of HTTP 413 and 503 errors at the end of your git push. It happened to me when I was pushing 40GB of UE4 binary dependencies. The weird thing was that I tried twice, both times the push took a good few hours to finish, and judging by the measured bandwidth usage, the LFS upload appeared to consume more bandwidth than the actual size of the data. According to some answers on this GitHub issue and this Microsoft developer community question, it seems the solution is running the following command inside the root of your local repository, before any git pull/push operations:

$ git config http.version HTTP/1.1

Well, not only did it do the trick and work like a charm, but the push time on the following git push also dropped dramatically, to 30 minutes for those hefty 40GB of UE4 binary dependencies.

OK, now that we are familiar with all the limits, if you deem this solution a worthy one for managing UE4 projects along with the engine source in the same repository, in the rest of this blog post I’m going to share my experiences and a script to keep the engine updated with ease using a Git + LFS setup.
